What is Docker?

Docker is a platform for building, shipping, and running applications inside containers. A developer can build a container with the required applications installed on it and give it to the QA team. The QA team then only needs to run that container to replicate the developer's environment.

How Docker works

Docker works by providing a standard way to run your code. You can think of it as an operating system for containers. A virtual machine virtualizes (removes the need to directly manage) server hardware; in the same way, containers virtualize the operating system of a server. Docker is installed on each server and provides simple commands you can use to build, start, or stop containers.

Why use Docker

Using Docker lets you ship code faster, standardize application operations, seamlessly move code, and save money by improving resource utilization. With Docker, you get a single object that can reliably run anywhere. Docker's simple and straightforward syntax gives you full control. Wide adoption means there's a robust ecosystem of tools and off-the-shelf applications that are ready to use with Docker.

- Ship more software faster

- Seamlessly move code

- Save money

When to use Docker

- Microservices

- Continuous integration & delivery

- Containers as a service

Running multiple virtual machines on the same host led to unstable performance. The boot-up process usually took a long time, and VMs did not solve problems like portability, software updates, or continuous integration and continuous delivery.

These drawbacks led to the emergence of a new technique called containerization. Now let me tell you about containerization.

Containerization

Containerization is a type of virtualization that works at the operating system level. Virtualization brings abstraction to the hardware, whereas containerization brings abstraction to the operating system.

Moving ahead, it's time to understand the reasons to use containers.

Reasons to use Containers

Following are the reasons to use containers:

- Containers have no guest OS. They run on the host's kernel and share the host's resources as and when needed.

- Applications start and run quickly, because each container's application-specific binaries and libraries run directly on the host kernel.

- Starting a container typically takes only a fraction of a second, and containers are lighter and faster than virtual machines.
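You can see the shared kernel for yourself. The sketch below assumes a Linux host with Docker installed and uses the public alpine image. On a Linux host, both lines should show the same kernel release, because the container has no kernel of its own. On Docker Desktop for macOS or Windows, the container instead reports the kernel of the Linux VM that Docker runs in.

```shell
# Kernel release of the host machine
HOST_KERNEL=$(uname -r)

# Kernel release seen from inside a throwaway Alpine container
# (--rm deletes the container as soon as the command exits)
CONTAINER_KERNEL=$(docker run --rm alpine:latest uname -r)

echo "host:      $HOST_KERNEL"
echo "container: $CONTAINER_KERNEL"
```

The first run also pulls the alpine image, so it is slower than later runs.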

Now that you understand what containerization is and why to use containers, it's time to look at our main concept here.

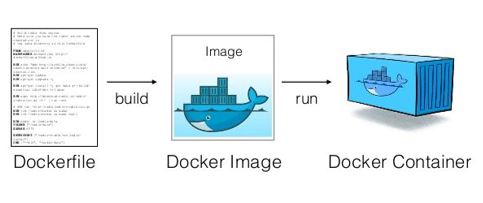

Dockerfile, Images & Containers

Dockerfile, Docker Images & Docker Containers are three important terms that you need to understand while using Docker.

As you can see in the above diagram, building the Dockerfile produces a Docker image. Running the Docker image then produces a Docker container.

Refer below to understand all three terms.

Dockerfile: A Dockerfile is a text document which contains all the commands that a user can call on the command line to assemble an image. So, Docker can build images automatically by reading the instructions from a Dockerfile. You can use docker build to create an automated build to execute several command-line instructions in succession.

Docker Image: In layman's terms, a Docker image can be compared to a template that is used to create Docker containers. These read-only templates are the building blocks of a container. You can use docker run to run the image and create a container.

Docker images are stored in a Docker registry. This can be either a user's local repository or a public registry like Docker Hub, which allows multiple users to collaborate in building an application.

Docker Container: A container is a running instance of a Docker image. It holds the entire package needed to run the application. Containers are therefore the ready-to-run applications created from Docker images, which is the ultimate purpose of Docker.

Docker Compose

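Docker Compose is a tool for defining and running multi-container applications. Its configuration is a YAML file that describes the services, networks, and volumes needed to set up the application. You can use Docker Compose to create separate containers, host them, and get them to communicate with each other. Containers on the same Compose network reach each other by service name and the port the service listens on. A port only has to be published (mapped to the host) when it must be reachable from outside.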
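Here is a minimal sketch of such a file. It assumes a web service based on the public nginx image and a cache service based on the public redis image. The service, network, and volume names are illustrative, not part of any real project. Both services join the same network, so nginx could reach Redis at cache:6379. The ports entry only publishes nginx to the host.

```shell
# Write an illustrative docker-compose.yml with two services
cat > docker-compose.yml <<'EOF'
services:
  web:
    image: nginx:latest
    ports:
      - "8080:80"          # host port 8080 -> container port 80
    depends_on:
      - cache              # start the cache service before web
    networks:
      - backend
  cache:
    image: redis:7
    volumes:
      - cache-data:/data   # named volume keeps Redis data across restarts
    networks:
      - backend

networks:
  backend: {}

volumes:
  cache-data: {}
EOF

# Create the network, the volume, and both containers in the background
docker compose up -d

# List the containers that belong to this Compose project
docker compose ps
```

docker compose down stops and removes the containers and the network. Add -v to remove the named volume as well. Older installations ship the standalone docker-compose command instead of the docker compose plugin.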

With this, we come to the end of the theory part of this Docker Explained blog, where you have learned all the basic terminology.

In the Hands-On part, I will show you the basic commands of Docker and how to create a Dockerfile, an image, and a Docker container.

Docker installation on Ubuntu 20.04

Set up the repository

Update the apt package index and install packages to allow apt to use a repository over HTTPS:

```
$ sudo apt-get update
$ sudo apt-get install \
    ca-certificates \
    curl \
    gnupg \
    lsb-release
```

Add Docker's official GPG key:

```
$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
```

Use the following command to set up the stable repository. To add the nightly or test repository, add the word nightly or test (or both) after the word stable in the command below.

```
$ echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \
  $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
```

Install Docker Engine

Update the apt package index, and install the latest version of Docker Engine and containerd:

```
$ sudo apt-get update
$ sudo apt-get install docker-ce docker-ce-cli containerd.io
$ sudo docker info   # verify that the Docker daemon is running on your machine
```

Verify that Docker Engine is installed correctly by running the hello-world image:

```
$ sudo docker run hello-world
```

Hands-On

Besides pulling ready-made images, Docker gives you the capability to create your own Docker images with the help of Dockerfiles. A Dockerfile is a simple text file with instructions on how to build your image.

The following steps explain how you should go about creating a Dockerfile.

Step 1 − Create a file called Dockerfile and edit it using vi. Please note that docker build looks for a file named "Dockerfile", with a capital "D", by default.

Step 2 − Add the following instructions to your Dockerfile.

```
#This is a sample Image
FROM ubuntu:latest
MAINTAINER Joe Ben Jen200@icloud.com
RUN apt-get update && apt-get install -y nginx
ENTRYPOINT ["/usr/sbin/nginx","-g","daemon off;"]
EXPOSE 80
```

The following points need to be noted about the above file −

The first line "#This is a sample Image" is a comment. You can add comments to a Dockerfile with the help of the # character.

The next line has to start with the FROM keyword. Only comments, parser directives, and ARG instructions may come before it. FROM tells Docker which base image you want to build your image from. In our example, we are creating an image from the ubuntu image.

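The next line names the person who is going to maintain this image. Here you specify the MAINTAINER keyword followed by the name and email ID. MAINTAINER is deprecated in current Docker releases. The recommended replacement is a label such as LABEL maintainer="Jen200@icloud.com".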

The RUN command is used to run instructions against the image. In our case, we first update the Ubuntu package index and then install the nginx server on our ubuntu image.

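ENTRYPOINT: Specifies the command that is executed when the container starts. Here it runs nginx in the foreground ("daemon off;"), which keeps the container running.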

EXPOSE: Documents the port on which the container listens (80 for nginx). It does not publish the port to the host; the -p option of docker run does that.

Once you are done with that, save the file.

Step 3 − Build the image from the directory that contains the Dockerfile, then check that it was created:

```
$ docker build -t dockerboss-app .
$ docker images   # show the docker image that was just built
```

Then run the image.
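Here is a minimal way to run the dockerboss-app image. It assumes host port 8080 is free and uses dockerboss-web as an illustrative container name. EXPOSE only documents the port, so the -p flag is what actually makes nginx reachable from the host.

```shell
IMAGE=dockerboss-app     # tag given to the image in the docker build step
NAME=dockerboss-web      # illustrative container name
HOST_PORT=8080           # any free port on the host works

# -d runs the container in the background; -p maps host port -> container port 80
docker run -d --name "$NAME" -p "${HOST_PORT}:80" "$IMAGE"

# nginx inside the container should now answer on the host port
curl -s "http://localhost:${HOST_PORT}" | head -n 5
```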

Basic Docker commands

Here are the basic Docker commands covered in this part:

- docker --version

- docker pull

- docker run

- docker ps

- docker ps -a

- docker exec

- docker stop

- docker kill

- docker commit

- docker login

- docker push

- docker images

- docker rm

- docker rmi

- docker build

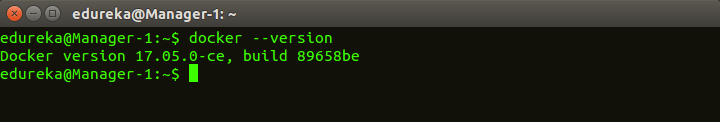

1. docker --version

Usage: docker --version

This command is used to get the currently installed version of Docker.

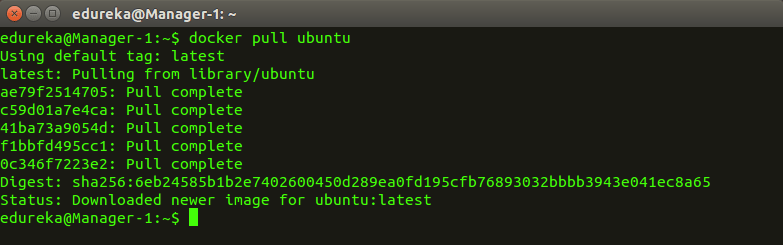

2. docker pull

Usage: docker pull <image name>

This command is used to pull images from a Docker registry (by default Docker Hub, hub.docker.com).

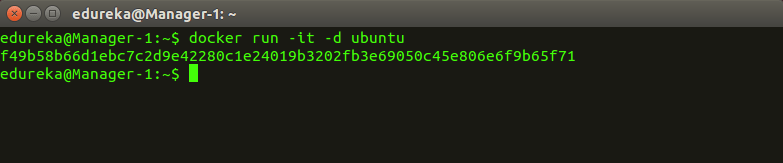

3. docker run

Usage: docker run -it -d <image name>

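This command creates and starts a container from an image. The -i option keeps STDIN open and -t allocates a pseudo-terminal. The -d option runs the container in the background (detached mode) and prints its ID.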

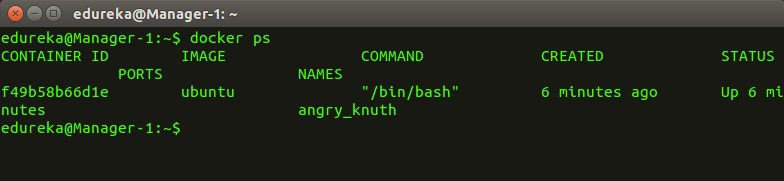
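Later commands take a <container id>. The sketch below gives the container a name instead (my-ubuntu is an illustrative name), so you can pass that name to docker exec, stop, kill, or rm. Because of -it, the default bash shell of the ubuntu image stays alive in the background instead of exiting immediately.

```shell
CONTAINER=my-ubuntu   # illustrative name used instead of a generated ID

# -i keeps STDIN open, -t allocates a terminal, -d detaches;
# --name lets later commands refer to the container by name
docker run -it -d --name "$CONTAINER" ubuntu:latest

# Confirm it is running (docker ps accepts a name filter)
docker ps --filter "name=$CONTAINER"
```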

4. docker ps

Usage: docker ps

This command is used to list the running containers

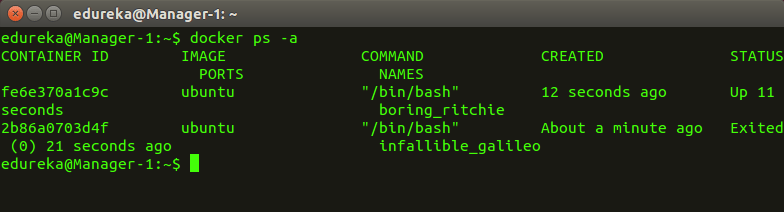

5. docker ps -a

Usage: docker ps -a

This command is used to show all the running and exited containers

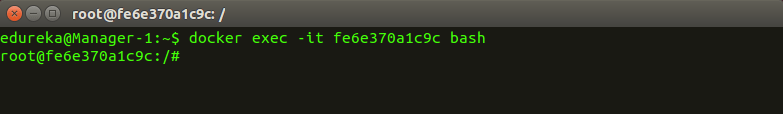

6. docker exec

Usage: docker exec -it <container id> bash

This command runs a command inside a running container. With -it and bash, it opens an interactive shell in that container.

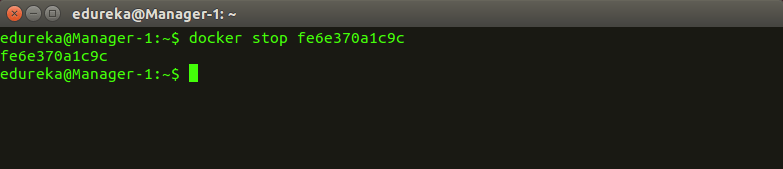
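docker exec can also run a single command without opening a shell. This sketch assumes a running container named my-ubuntu, an illustrative name (for example, one started with docker run -it -d --name my-ubuntu ubuntu:latest).

```shell
CONTAINER=my-ubuntu   # illustrative; substitute your container ID or name

# Run one command inside the container and print its output
docker exec "$CONTAINER" cat /etc/os-release

# Open an interactive bash session; type "exit" to leave
# (leaving the exec shell does not stop the container)
docker exec -it "$CONTAINER" bash
```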

7. docker stop

Usage: docker stop <container id>

This command stops a running container

8. docker kill

Usage: docker kill <container id>

This command kills the container by stopping its execution immediately. The difference between 'docker kill' and 'docker stop' is that 'docker stop' gives the container time to shut down gracefully. When a container takes too long to stop, you can opt to kill it.

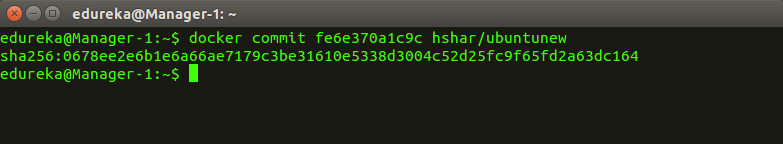
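Both commands can be tuned. docker stop sends SIGTERM (or the image's configured stop signal). If the container has not exited after a grace period of 10 seconds by default, it then sends SIGKILL. docker kill sends SIGKILL unless you choose another signal with -s. This sketch assumes a running container named my-ubuntu (illustrative):

```shell
CONTAINER=my-ubuntu   # illustrative container name or ID

# Ask the main process to exit, waiting up to 30 seconds before force-killing it
docker stop -t 30 "$CONTAINER"

# Start it again so the next command has something to kill
docker start "$CONTAINER"

# Terminate immediately (SIGKILL is the default signal for docker kill)
docker kill "$CONTAINER"
```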

9. docker commit

Usage: docker commit <container id> <username/imagename>

This command creates a new image of an edited container on the local system

10. docker login

Usage: docker login

This command is used to log in to the Docker Hub repository.

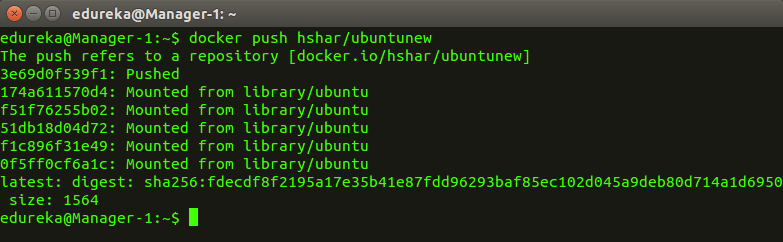

11. docker push

Usage: docker push <username/image name>

This command is used to push an image to the Docker Hub repository.
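To push to Docker Hub, the image name must start with your Docker Hub username (or an organization you can push to). This sketch publishes the dockerboss-app image from the hands-on part. your-username stands for a hypothetical Docker Hub account, and 1.0 is an illustrative tag. An image created with docker commit can be pushed the same way.

```shell
DOCKER_USER=your-username                # hypothetical Docker Hub account
TAG="$DOCKER_USER/dockerboss-app:1.0"    # illustrative repository and tag

# Give the local image a second name under your Docker Hub namespace
docker tag dockerboss-app "$TAG"

# Authenticate (prompts for credentials), then upload the image
docker login
docker push "$TAG"
```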

12. docker images

Usage: docker images

This command lists all the locally stored docker images.

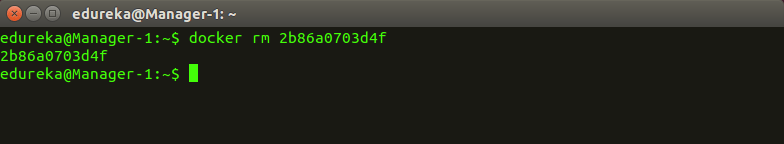

13. docker rm

Usage: docker rm <container id>

This command is used to delete a stopped container.

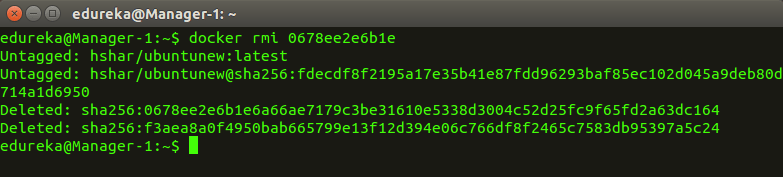

14. docker rmi

Usage: docker rmi <image-id>

This command is used to delete an image from local storage.

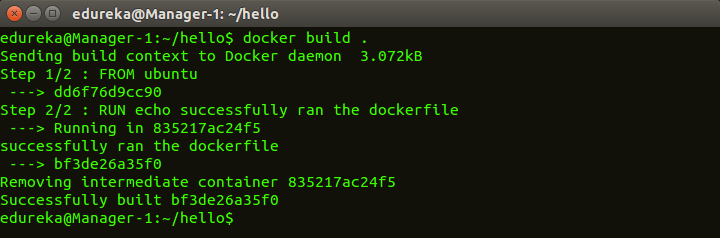
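docker rm refuses to delete a running container, and docker rmi refuses to delete an image that any container (running or stopped) still uses. The sketch below shows the usual cleanup order and the prune commands that remove unused objects in one go. It assumes the illustrative my-ubuntu container and that no other container uses ubuntu:latest.

```shell
CONTAINER=my-ubuntu   # illustrative container name

# Stop, then remove the container (docker rm -f does both in one step)
docker stop "$CONTAINER"
docker rm "$CONTAINER"

# The image can be removed once no container references it
docker rmi ubuntu:latest

# Remove all stopped containers, then all dangling (untagged) images;
# -f skips the confirmation prompt
docker container prune -f
docker image prune -f
```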

15. docker build

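Usage: docker build <path to build context>

This command builds an image from a Dockerfile. The path is the build context, the directory whose files are sent to the builder. Docker uses the file named Dockerfile in that directory unless you select another file with -f.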
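This sketch uses a Dockerfile with a non-default name and a build context that is not the current directory. The directory demo, the file Dockerfile.alt, and the tag demo-alt are illustrative names. The final command should print the text from the CMD instruction.

```shell
# Illustrative layout: demo/ is the build context, and the
# Dockerfile inside it has a non-default name
mkdir -p demo
cat > demo/Dockerfile.alt <<'EOF'
FROM alpine:latest
CMD ["echo", "built from Dockerfile.alt"]
EOF

# -f selects the file; the last argument (demo) is the build context
docker build -t demo-alt -f demo/Dockerfile.alt demo

# Run the image once and remove the container afterwards
docker run --rm demo-alt
```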

Use multi-stage builds

With multi-stage builds, you use multiple FROM statements in your Dockerfile. Each FROM instruction can use a different base, and each of them begins a new stage of the build. You can selectively copy artifacts from one stage to another, leaving behind everything you don't want in the final image. The following example builds a small Go application in one stage and copies only the compiled binary into a minimal Alpine image.

Example:

```
# syntax=docker/dockerfile:1
FROM golang:1.16
WORKDIR /go/src/github.com/alexellis/href-counter/
RUN go get -d -v golang.org/x/net/html
COPY app.go ./
RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o app .

FROM alpine:latest
RUN apk --no-cache add ca-certificates
WORKDIR /root/
COPY --from=0 /go/src/github.com/alexellis/href-counter/app ./
CMD ["./app"]
```

You only need the single Dockerfile. You don't need a separate build script, either. Just run docker build.

The end result is a tiny production image with a significant reduction in complexity. You don't need to create any intermediate images, and you don't need to extract any artifacts to your local system at all.

How does it work? The second FROM instruction starts a new build stage with the alpine:latest image as its base. The COPY --from=0 line copies just the built artifact from the previous stage into this new stage. The Go SDK and any intermediate artifacts are left behind and are not saved in the final image.
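Stages can also be named with AS and referenced by that name instead of an index. This keeps COPY --from correct if the stages are later reordered. docker build --target then stops the build at a chosen stage, for example to inspect the build environment. The sketch below writes such a variant of the Dockerfile. It assumes app.go sits in the current directory, as in the example. The file name Dockerfile.named, the stage name builder, and the image tags are illustrative.

```shell
# Same two stages as the example, but the first one is named "builder"
cat > Dockerfile.named <<'EOF'
# syntax=docker/dockerfile:1
FROM golang:1.16 AS builder
WORKDIR /go/src/github.com/alexellis/href-counter/
RUN go get -d -v golang.org/x/net/html
COPY app.go ./
RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o app .

FROM alpine:latest
RUN apk --no-cache add ca-certificates
WORKDIR /root/
COPY --from=builder /go/src/github.com/alexellis/href-counter/app ./
CMD ["./app"]
EOF

# Full build: only the final alpine-based stage ends up in the image
docker build -f Dockerfile.named -t href-counter:latest .

# Stop after the "builder" stage, e.g. to inspect the Go build environment
docker build -f Dockerfile.named --target builder -t href-counter:builder .
```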
