Understanding AWS VPCs

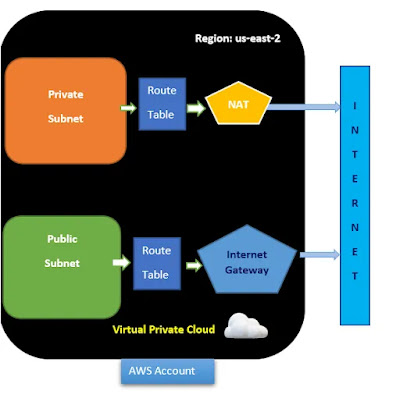

The AWS cloud offers dozens of services spanning compute, storage, networking, and more. These services can communicate with one another, much like the services in an on-prem datacenter. But each service is independent of the others, and they are not necessarily isolated from one another. The AWS VPC changes that.

An AWS VPC (Virtual Private Cloud) is a logically isolated virtual network in which you launch AWS resources. Conceptually, an AWS VPC is much like owning a datacenter, but with built-in benefits such as scalability, fault tolerance, and virtually unlimited storage.

Building the Terraform Configuration for an AWS VPC

The Terraform configuration below:

Creates a VPC
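As a minimal sketch of what such a configuration might look like, the block below declares the AWS provider and a single aws_vpc resource. The variable names (region, vpc_cidr), their default values, the resource label main, and the Name tag are illustrative assumptions rather than values taken from this tutorial; adjust them to match your own variables files.

```hcl
# Hypothetical input variables; the names and defaults are assumptions.
variable "region" {
  description = "AWS region in which to create the VPC"
  type        = string
  default     = "us-east-2"
}

variable "vpc_cidr" {
  description = "IPv4 CIDR block for the VPC"
  type        = string
  default     = "10.0.0.0/16"
}

# Configure the AWS provider. Credentials are expected to come from the
# environment or a shared credentials file, not from this file.
provider "aws" {
  region = var.region
}

# Create the VPC itself.
resource "aws_vpc" "main" {
  cidr_block           = var.vpc_cidr
  instance_tenancy     = "default"
  enable_dns_support   = true
  enable_dns_hostnames = true

  tags = {
    Name = "example-vpc" # illustrative tag value
  }
}
```

Keeping the region and CIDR block in variables means the same configuration can be reused for another environment by changing only the variables file.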

Running Terraform to Create the AWS VPC

Now that you have the Terraform configuration file and variables files ready to go, it’s time to initialize Terraform and create the VPC! To provision a Terraform configuration, Terraform typically uses a three-stage approach: terraform init → terraform plan → terraform apply. Let’s walk through each stage now.

1. Open a terminal in VS Code.

2. Run the terraform init command in the same directory as the configuration files. The terraform init command initializes the working directory and downloads the plugins and providers required to work with resources.

terraform init

If all goes well, you should see the message Terraform has been successfully initialized in the output, as shown below.

Terraform initialized successfully
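The providers that terraform init downloads are the ones the configuration declares. A minimal sketch of such a declaration follows, assuming the AWS provider published as hashicorp/aws; the version constraints are illustrative assumptions, not requirements stated in this tutorial.

```hcl
# Tell Terraform which provider plugins this configuration needs.
# terraform init reads this block and installs matching versions.
terraform {
  required_version = ">= 1.0" # assumed minimum Terraform version

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.0" # assumed constraint; pin to what you have tested
    }
  }
}
```

Pinning a version range here helps keep terraform init reproducible across machines, since each run resolves to a provider release within the same range.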